Overview

Build accountability into your AI models with toolkits for Intel® Distribution of OpenVINO™ toolkit and TensorFlow* models. Learn how to deploy the Intel® Explainable AI Tools for OpenVINO™ and TensorFlow* models on Microsoft* Windows* and Linux*.

- Recommended Hardware: Intel® Core™ i5 processor or above with 16 GB of RAM

- Operating System: Ubuntu* 20.04 LTS or Microsoft* Windows* 10

How It Works

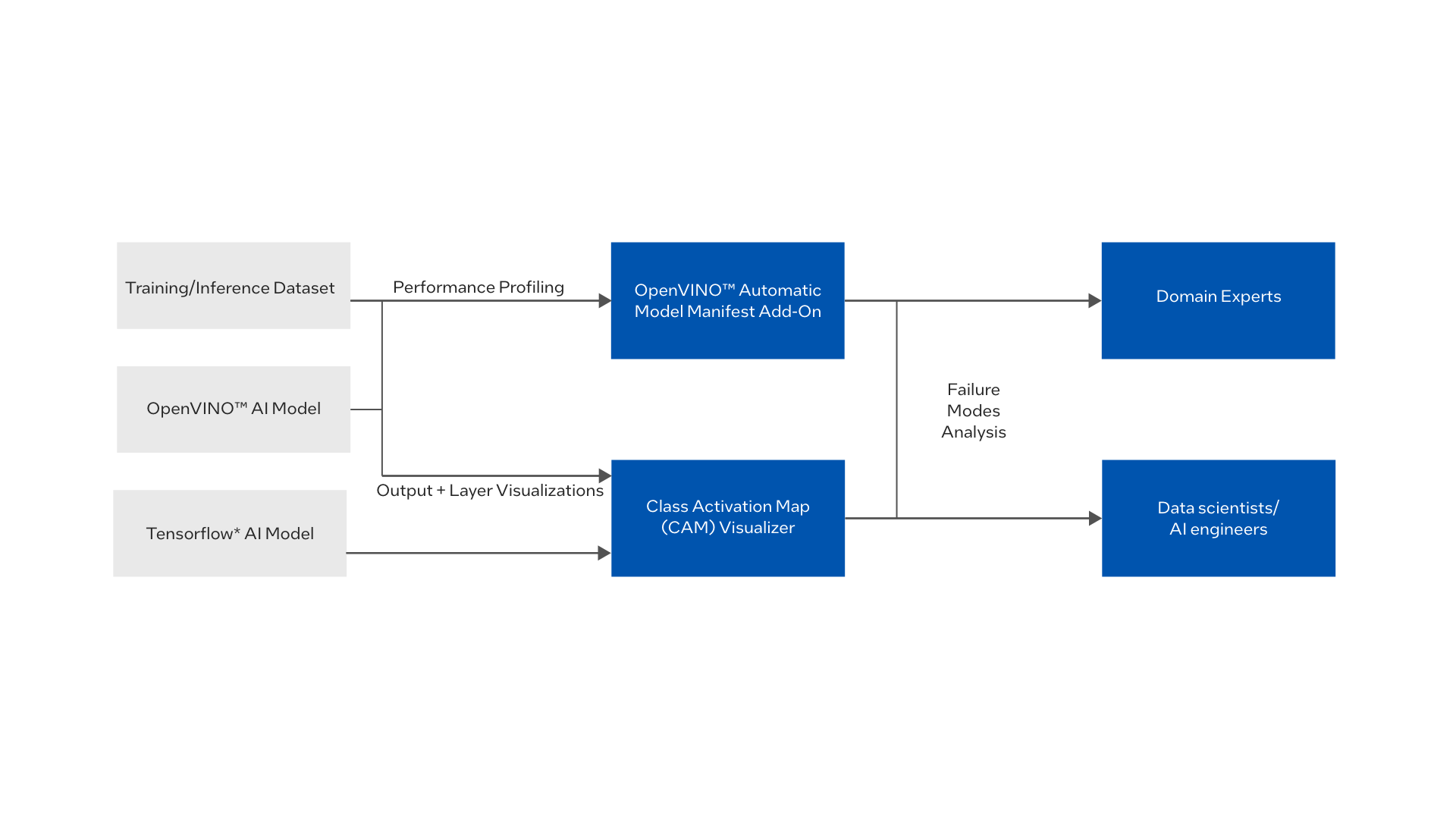

The Intel® Explainable AI Tools offered in the Developer Catalog are software toolkits that can be used through a no-code GUI or as a lightweight API embedded directly into other programs and AI workflows, such as other Edge Software Hub reference implementations.

The resulting visualizations and reports can be used to:

- Improve understanding of AI models and insight into their reliability, which builds user trust.

- Help users compare performance across models and identify improvements to inference and training processes.

Deploy Explainable AI Software Tools

This section links to deployment documentation for specific Intel® Explainable AI Tools that are compatible with OpenVINO™ version 2022.1. To learn more about the individual software toolkits, see the Learn More section.

CAM-Visualizer: Class Activation Map Visualization Toolkit

CAM-Visualizer is a GUI-based, OS-independent app for OpenVINO™ and TensorFlow* classification models. Given an input image, it lets users view feature activation maps and class activation maps, down to the level of an individual layer, in an intuitive, interactive GUI. Future releases will support the latest OpenVINO™ version.
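To show what a class activation map is, here is a minimal NumPy sketch of the classic CAM formulation (Zhou et al., 2016). It assumes a classifier whose last convolutional layer feeds a global average pooling layer followed by a dense layer. The function name `class_activation_map` and the array layouts are illustrative assumptions; this is not CAM-Visualizer's API and not necessarily how the app computes its maps.

```python
import numpy as np


def class_activation_map(feature_maps: np.ndarray, fc_weights: np.ndarray, class_idx: int) -> np.ndarray:
    """Classic CAM for a network ending in global average pooling + dense layer.

    feature_maps: (H, W, K) activations of the last convolutional layer.
    fc_weights:   (K, num_classes) weights of the dense layer after pooling.
    Returns an (H, W) map min-max scaled to [0, 1] for display.
    """
    # Weight each channel by how much it contributes to the target class score,
    # then sum over channels: (H, W, K) @ (K,) -> (H, W).
    cam = feature_maps @ fc_weights[:, class_idx]
    # Shift and scale so the map can be rendered as a heatmap.
    cam = cam - cam.min()
    peak = cam.max()
    return cam / peak if peak > 0 else cam
```

The min-max scaling is only for visualization; in practice the map is typically upsampled to the input image size and overlaid on it.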

OpenVINO™ Automatic Model Manifest Add-On

OpenVINO™ Automatic Model Manifest Add-On enables automated quantitative analysis and performance profiling of OpenVINO™ computer vision models. It generates reports, in the format of a model card, that document the failure modes of AI computer vision models along with performance profiling, data quality, and explanation metrics. For OpenVINO™ models, the tool consumes output information and metadata from the OpenVINO™ Accuracy Checker tool to generate a report that is more visually comprehensible and that filters for the information relevant to ethical and AI observability analysis. Future releases will add TensorFlow* support, automatic metric comparison across multiple models, and support for the latest OpenVINO™ version.
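Performance profiling of an inference model usually means timing repeated inference calls and summarizing the latency distribution. The sketch below is a generic, framework-agnostic illustration of that idea, not the Add-On's implementation. `profile_latency` is a hypothetical helper, and `infer` stands in for any callable that runs one inference (for example, a wrapper around an OpenVINO™ compiled model).

```python
import statistics
import time
from typing import Callable, Dict, Sequence


def profile_latency(infer: Callable[[object], object], inputs: Sequence[object],
                    warmup: int = 2) -> Dict[str, float]:
    """Time one `infer` call per input and summarize latency in milliseconds."""
    # Warm-up calls absorb one-time costs (caches, lazy init) and are not recorded.
    for x in inputs[:warmup]:
        infer(x)

    samples = []
    for x in inputs:
        start = time.perf_counter()
        infer(x)
        samples.append((time.perf_counter() - start) * 1000.0)

    samples.sort()
    # Approximate 90th percentile: nearest-rank index into the sorted samples.
    p90_index = min(len(samples) - 1, int(round(0.9 * (len(samples) - 1))))
    total_s = sum(samples) / 1000.0
    return {
        "mean_ms": statistics.mean(samples),
        "median_ms": statistics.median(samples),
        "p90_ms": samples[p90_index],
        "throughput_fps": len(samples) / total_s if total_s > 0 else float("inf"),
    }


# Usage with a hypothetical stand-in for a real model call.
def fake_infer(n: int) -> int:
    return sum(i * i for i in range(n))


stats = profile_latency(fake_infer, [2000] * 10)
```

Tail latency (p90) is reported alongside the mean because a few slow calls can matter more to an edge deployment than the average does.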

Intel® Explainable AI Tools - Model Card Generator

This open-source Python module lets users create interactive HTML reports for TensorFlow and PyTorch models. Each report contains model details and a quantitative analysis that displays performance and fairness metrics across groups. These model cards can be part of a traditional end-to-end platform for deploying ML pipelines on tabular, image, and text data, promoting transparency, fairness, and accountability.
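Performance and fairness metrics across groups amount to slicing an evaluation set by a group attribute and comparing a metric on each slice. The following pure-Python sketch assumes binary (0/1) labels and uses hypothetical function names; it illustrates the computation only and is not the Model Card Generator's API.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def metrics_by_group(
    y_true: Sequence[int],
    y_pred: Sequence[int],
    groups: Sequence[Hashable],
) -> Dict[Hashable, Dict[str, float]]:
    """Per-group accuracy and positive-prediction rate for binary labels (0/1)."""
    counts: Dict[Hashable, Dict[str, int]] = defaultdict(
        lambda: {"n": 0, "correct": 0, "predicted_positive": 0}
    )
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        c["n"] += 1
        c["correct"] += int(truth == pred)
        c["predicted_positive"] += int(pred == 1)
    return {
        group: {
            "accuracy": c["correct"] / c["n"],
            "positive_rate": c["predicted_positive"] / c["n"],
        }
        for group, c in counts.items()
    }


def demographic_parity_difference(per_group: Dict[Hashable, Dict[str, float]]) -> float:
    """Largest gap in positive-prediction rate between any two groups (0 means parity)."""
    rates = [m["positive_rate"] for m in per_group.values()]
    return max(rates) - min(rates)


# Usage with a toy evaluation set split into groups "a" and "b".
per_group = metrics_by_group(
    y_true=[1, 0, 1, 1, 0, 0],
    y_pred=[1, 0, 0, 1, 1, 0],
    groups=["a", "a", "a", "b", "b", "b"],
)
gap = demographic_parity_difference(per_group)
```

In this toy data both groups have the same accuracy, yet group "b" receives positive predictions twice as often, which is the kind of disparity a per-group table makes visible.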

Intel® Explainable AI Tools - Explainer

This open-source project lets users run post-hoc model distillation and visualization methods through a simple Python API to examine the predictive behavior of TensorFlow and PyTorch models. It includes the following modules:

- Attributions: visualize negative and positive attributions of tabular features, pixels, and word tokens for predictions

- Grad-CAM: create heatmaps for CNN image classifications using gradient-weighted class activation mapping

- Metrics: gain insight into models through the measurements and visualizations needed throughout the machine learning workflow
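Grad-CAM weights each feature map of a chosen convolutional layer by the spatially averaged gradient of the target class score, sums the weighted maps, and keeps only the positive part. A minimal NumPy sketch of that step follows. It assumes the layer activations and gradients have already been extracted (in TensorFlow, for example, with `tf.GradientTape`), and `grad_cam` is a hypothetical name, not the Explainer API.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM heatmap for one convolutional layer.

    activations: (H, W, K) feature maps of the chosen layer.
    gradients:   (H, W, K) gradients of the target class score w.r.t. those maps.
    Returns an (H, W) heatmap scaled to [0, 1].
    """
    # Channel importance: global-average-pool the gradients over height and width.
    weights = gradients.mean(axis=(0, 1))            # (K,)
    # Weighted sum of feature maps; ReLU keeps only evidence *for* the class.
    cam = np.maximum(activations @ weights, 0.0)     # (H, W)
    peak = cam.max()
    return cam / peak if peak > 0 else cam
```

Unlike classic CAM, this needs no global-average-pooling head, which is why Grad-CAM can be applied to any CNN layer whose gradients are available.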

Summary and Next Steps

You have successfully deployed the Intel® Explainable AI Tools in the Edge Software Hub Developer Catalog!

As a next step, try the software toolkits with other reference implementations.

Learn More

Learn more about the individual software solutions:

Support Forum

If you're unable to resolve your issues, contact the Support Forum.