Overview

The Immersive Video Sample, powered by Open Visual Cloud, includes two samples based on different streaming frameworks:

- Omnidirectional Media Format (OMAF) sample: Based on the OMAF standard. Uses MPEG-DASH as the protocol to deliver tiled 360 video streams. Supports both video-on-demand (VOD) and live streaming modes.

- WebRTC (real-time communications) sample: Enables low-latency tiled 360 video streaming based on the WebRTC protocol and the Open WebRTC Toolkit media server framework.

Select Configure & Download to download the sample and the software listed below.

- Time to Complete: 1 hour

- Programming Language: C/C++, JavaScript*, Java*

- Available Software:

  - WebRTC

  - FFmpeg

  - NGINX

Target System Requirements

- Linux* 64-bit operating system

- Recommended OS for Client: CentOS* 7.6 or Ubuntu* 18.04 Server LTS

- Recommended OS for Server: CentOS 7.6

- Disk Space needed: 10 GB (Source code: 275 MB, Docker* Images: ~10 GB)
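
The requirements above can be checked on a host before downloading anything. The following is a minimal sketch, not part of the sample itself: the function name `check_host` and the returned keys are hypothetical, the 10 GB threshold is taken from the list above, and the 64-bit test is a heuristic that only looks at the machine name reported by the platform.

```python
import platform
import shutil

# Free space the requirements list asks for, in GB.
REQUIRED_FREE_GB = 10


def check_host(path: str = ".", required_gb: float = REQUIRED_FREE_GB) -> dict:
    """Report whether this host looks like 64-bit Linux with enough free disk.

    `path` is the directory where the source and Docker images would live.
    """
    usage = shutil.disk_usage(path)
    free_gb = usage.free / 1024 ** 3
    return {
        "is_linux": platform.system() == "Linux",
        # Heuristic: common 64-bit machine names end in "64" (x86_64, aarch64).
        "is_64bit": platform.machine().endswith("64"),
        "free_gb": round(free_gb, 1),
        "enough_disk": free_gb >= required_gb,
    }


if __name__ == "__main__":
    for key, value in check_host().items():
        print(f"{key}: {value}")
```

The script does not verify the distribution or version, so the recommended CentOS and Ubuntu releases still need to be confirmed by hand.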

How It Works

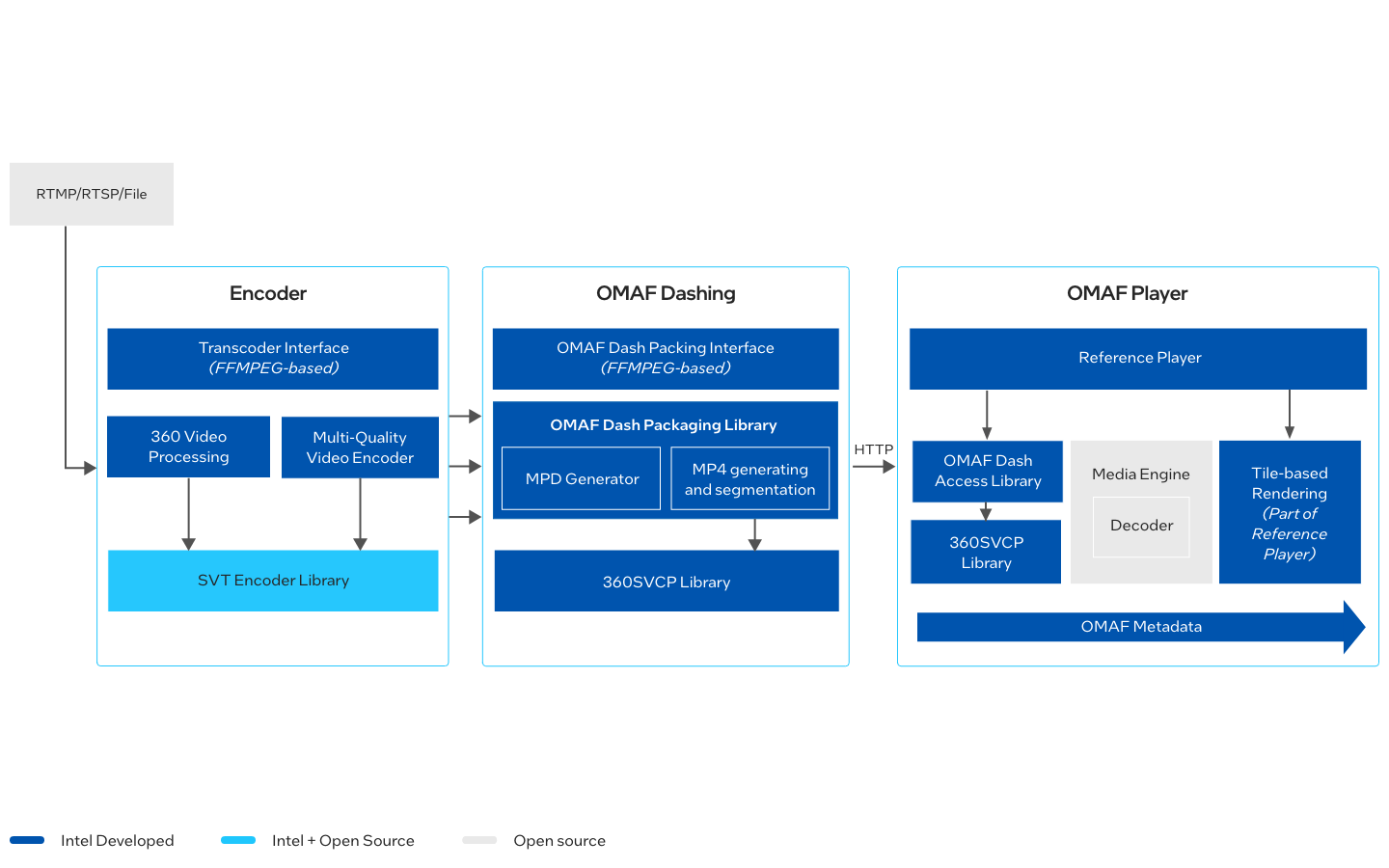

OMAF Sample

The OMAF Sample provides a quick way to set up end-to-end (E2E), OMAF-compliant 360 video streaming. It supports both VOD and live streaming of 4K and 8K content, and can be deployed with Kubernetes* or directly from a Docker* image.

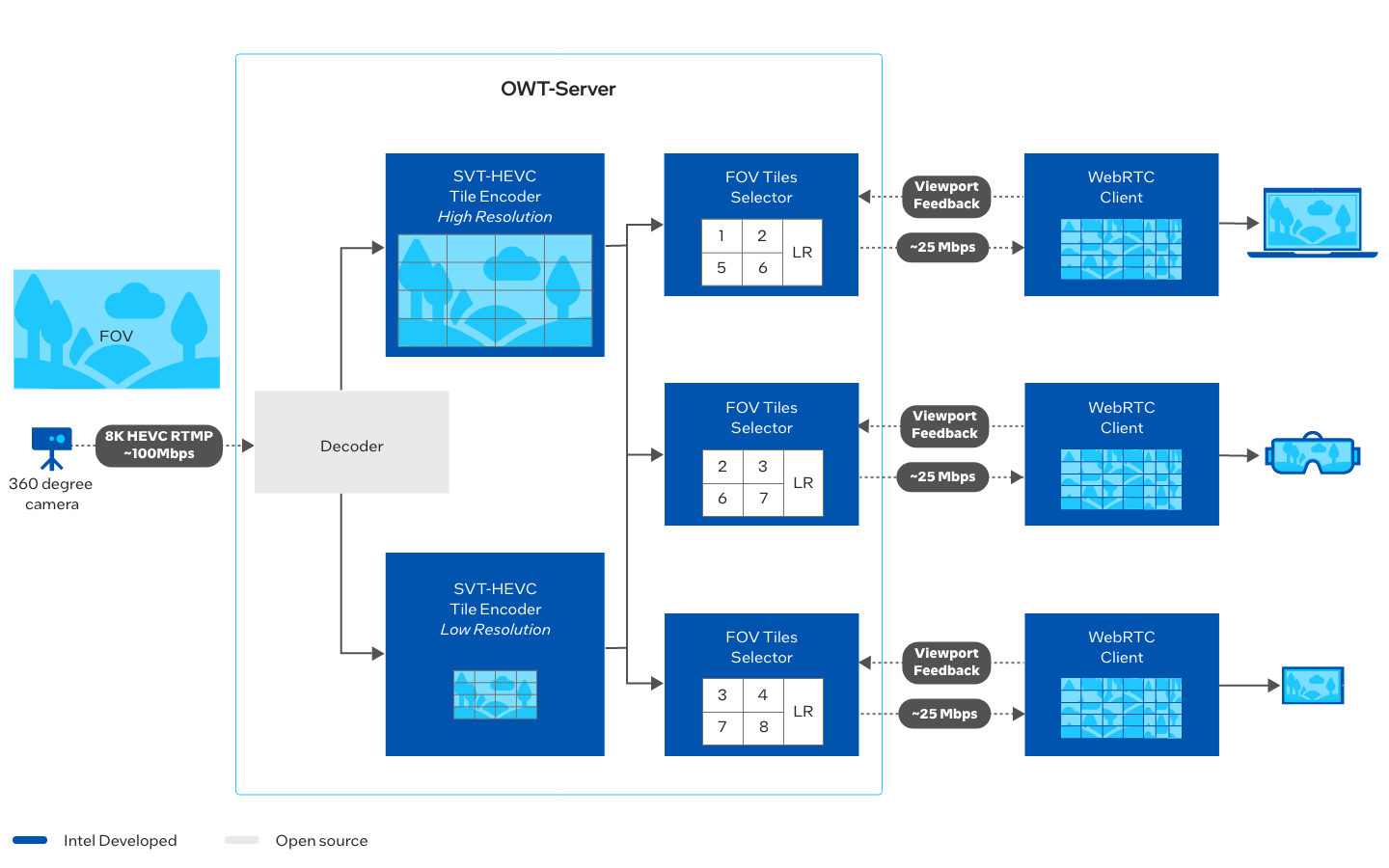

WebRTC Sample

The WebRTC Sample provides a low-latency, end-to-end 360 video streaming service based on WebRTC technology and the Open WebRTC Toolkit (OWT) media server. It supports 4K and 8K tile-based transcoding powered by SVT-HEVC, and bandwidth-efficient adaptive streaming based on the viewer's field of view (FoV).
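
To illustrate the idea behind FoV-based tiled streaming, the sketch below picks which tiles of a frame overlap the viewer's current viewport, so that only those tiles need to be sent at high quality. It is a simplified illustration and not the sample's actual algorithm: it assumes an equirectangular frame split into a regular grid, treats the viewport as a rectangle in yaw/pitch space (ignoring spherical distortion near the poles), and the function name `tiles_in_fov` and its parameters are hypothetical.

```python
from typing import List, Tuple


def tiles_in_fov(yaw_deg: float, pitch_deg: float,
                 hfov_deg: float, vfov_deg: float,
                 cols: int, rows: int) -> List[Tuple[int, int]]:
    """Return (row, col) indices of grid tiles that overlap the viewport.

    Assumed layout: columns span yaw from -180 to 180 degrees (left to right),
    rows span pitch from 90 to -90 degrees (top to bottom).
    """
    tile_w = 360.0 / cols
    tile_h = 180.0 / rows

    # Vertical extent of the viewport, clamped at the poles.
    view_top = min(90.0, pitch_deg + vfov_deg / 2)
    view_bottom = max(-90.0, pitch_deg - vfov_deg / 2)

    selected = []
    for r in range(rows):
        tile_top = 90.0 - r * tile_h
        tile_bottom = tile_top - tile_h
        # Skip rows that lie entirely above or below the viewport.
        if tile_bottom >= view_top or tile_top <= view_bottom:
            continue
        for c in range(cols):
            tile_center_yaw = -180.0 + (c + 0.5) * tile_w
            # Shortest angular distance, handling the wrap at +/-180 degrees.
            delta = abs((tile_center_yaw - yaw_deg + 180.0) % 360.0 - 180.0)
            # Overlap if the centers are closer than the two half-widths.
            if delta < hfov_deg / 2 + tile_w / 2:
                selected.append((r, c))
    return selected


if __name__ == "__main__":
    # Viewer looking straight ahead with a 90x90 degree viewport on a 4x2 grid.
    print(tiles_in_fov(0.0, 0.0, 90.0, 90.0, cols=4, rows=2))
```

With a 4x2 grid and a viewport centered at yaw 0, only the two middle columns are selected; a viewport centered at yaw 180 selects the first and last columns, because the frame wraps around horizontally. A streaming client could request the selected tiles at high quality and the rest at a lower bitrate.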

Get Started

Install Immersive Video Sample

Select Configure & Download to download the Immersive Video sample.

OMAF Sample

Follow the steps on GitHub to build the OMAF-based sample.

- Get Started

- Install and deploy (Docker)

- Install and deploy (Kubernetes)

WebRTC Sample

Follow the steps on GitHub to build the WebRTC-based sample.

- Install and run (server side)

- Install and run (client side)

Learn More

To continue learning, see the following guides and software resources: